Kerberos: Adding Kerberos Authentication to a CDH Cluster

Preface

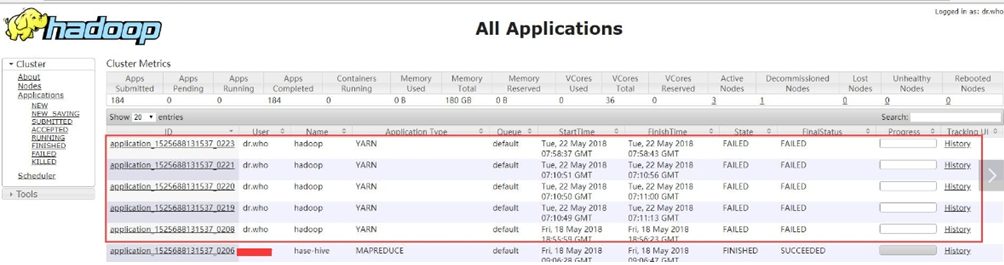

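- Recent reports describe attacks from the public internet that exploit unauthorized access to the Hadoop YARN resource management system to compromise internal servers and plant cryptocurrency-mining trojans, along with automated scripts that carry out the attack. The root cause is a misconfigured Hadoop YARN resource management system that can be reached without authorization. Without authenticating, an attacker can submit jobs through the REST API to execute arbitrary commands and ultimately take full control of the server. The telltale sign of a compromise is visible on the Hadoop YARN management page: several jobs created by the user dr.who:

- One of the internal remediation recommendations is to enable Kerberos authentication on the cluster. I have already enabled Kerberos in production; what follows is the operation log from enabling it in the test environment, tidied up into this post: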

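Installing the Kerberos Service

Preparation

I will skip the introduction to Kerberos and get straight to the point. First, pick one server to host the core Kerberos service, the master KDC; every other node gets the Kerberos client.

| Hostname | Role |
| --- | --- |
| fetch-master | master KDC |
| fetch-slave1 | Kerberos client |
| fetch-slave2 | Kerberos client |
| fetch-slave3 | Kerberos client |
| fetch-slave4 | Kerberos client |
| ... | ... |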

Installing the Packages

- We run the KDC on fetch-master and install krb5-server, krb5-libs, krb5-auth-dialog, and krb5-workstation on that host.

Command: yum install krb5-server krb5-libs krb5-auth-dialog krb5-workstation

[root@fetch-master ~]# yum install krb5-server krb5-libs krb5-auth-dialog krb5-workstation

...

Installed:

krb5-auth-dialog.x86_64 0:0.13-6.el6 krb5-workstation.x86_64 0:1.10.3-65.el6

Dependency Installed:

libkadm5.x86_64 0:1.10.3-65.el6

Dependency Updated:

krb5-devel.x86_64 0:1.10.3-65.el6 krb5-libs.x86_64 0:1.10.3-65.el6

Complete!

Editing the Configuration

Edit the configuration file /etc/krb5.conf.

LOCAL.DOMAIN is the realm name used here. The name is arbitrary, but it must match everywhere it appears later. Change the log directory and specify the kdc and admin_server addresses as follows:

[root@fetch-master ~]# vim /etc/krb5.conf

[logging]

default = FILE:/app/log/krb5libs.log

kdc = FILE:/app/log/krb5kdc.log

admin_server = FILE:/app/log/kadmind.log

[libdefaults]

default_realm = LOCAL.DOMAIN

dns_lookup_realm = false

dns_lookup_kdc = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

[realms]

LOCAL.DOMAIN = {

kdc = localhost

admin_server = localhost

}

[domain_realm]

.local.domain = LOCAL.DOMAIN

local.domain = LOCAL.DOMAIN

Edit the configuration file /var/kerberos/krb5kdc/kadm5.acl.

This file maps principals to permissions. The entry below grants all privileges to every principal whose name ends in /admin@LOCAL.DOMAIN:

[root@fetch-master ~]# vim /var/kerberos/krb5kdc/kadm5.acl

*/admin@LOCAL.DOMAIN *

Edit the configuration file /var/kerberos/krb5kdc/kdc.conf.

master_key_type and supported_enctypes default to aes256-cts. For Java to use aes256-cts, extra jar files are required: download the Java Cryptography Extension (JCE) Unlimited Strength Jurisdiction Policy Files, unpack them, and place local_policy.jar and US_export_policy.jar in $JAVA_HOME/jre/lib/security.

Alternatively, avoid aes256-cts by removing it, as follows:

[root@fetch-master ~]# vim /var/kerberos/krb5kdc/kdc.conf

[kdcdefaults]

kdc_ports = 88

kdc_tcp_ports = 88

[realms]

LOCAL.DOMAIN = {

master_key_type = aes256-cts

max_renewable_life= 7d 0h 0m 0s

acl_file = /var/kerberos/krb5kdc/kadm5.acl

dict_file = /app/share/dict/words

admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab

supported_enctypes = aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal

}
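If the JCE policy files are not installed, the alternative described above is to drop aes256-cts from the enctype list. The following is a minimal sketch of that edit applied to a single space-separated list. The helper name drop_aes256 is hypothetical, and it only filters text on stdin; it does not edit kdc.conf.

```shell
#!/usr/bin/env bash
# Sketch: remove every aes256-cts entry from a space-separated enctype list.
# drop_aes256 is a hypothetical helper name used only for illustration.
drop_aes256() {
  # One enctype per line, filter out aes256-cts entries, join back with spaces.
  tr -s ' ' '\n' | grep -v '^aes256-cts' | paste -sd' ' -
}

# Example: feed the value part of a supported_enctypes line on stdin.
# printf '%s\n' 'aes256-cts:normal aes128-cts:normal' | drop_aes256
```

The result can be pasted back as the value of supported_enctypes.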

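Creating and Initializing the Kerberos Database

Create and initialize the Kerberos database with kdb5_util create -s -r LOCAL.DOMAIN, and set the master password when prompted.

- [-s] creates a stash file that stores the master server key (used by krb5kdc);

- [-r] specifies the realm name; it is only required when krb5.conf defines more than one realm;

- The database is stored under /var/kerberos/krb5kdc. To rebuild the database, delete the principal-related files in that directory.

[root@fetch-master ~]# kdb5_util create -r LOCAL.DOMAIN -s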

Loading random data

Initializing database '/var/kerberos/krb5kdc/principal' for realm 'LOCAL.DOMAIN',

master key name 'K/M@LOCAL.DOMAIN'

You will be prompted for the database Master Password.

It is important that you NOT FORGET this password.

Enter KDC database master key:

Re-enter KDC database master key to verify:

kdb5_util: Unable to find requested database type while creating database '/var/kerberos/krb5kdc/principal'
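As noted in the list above, rebuilding the database means removing the principal-related files from the KDC directory first. The sketch below only lists the files that match, so they can be reviewed before anything is deleted. The helper name list_kdb_files is hypothetical, and the default directory is the one used in this post.

```shell
#!/usr/bin/env bash
# Sketch: list the KDC database files that a rebuild would remove.
# list_kdb_files is a hypothetical helper; it prints paths and never deletes.
list_kdb_files() {
  local dir="${1:-/var/kerberos/krb5kdc}"
  local f
  for f in "$dir"/principal*; do
    # Skip the unexpanded pattern when nothing matches.
    [ -e "$f" ] && printf '%s\n' "$f"
  done
  return 0
}

# Example: list_kdb_files /var/kerberos/krb5kdc
```

After reviewing the list, the files can be removed by hand and kdb5_util create run again.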

Create the Kerberos administrator account and set its password:

[root@fetch-master ~]# kadmin.local

Authenticating as principal root/admin@LOCAL.DOMAIN with password.

kadmin.local: add

add_policy add_principal addpol addprinc

kadmin.local: addprinc admin/admin@LOCAL.DOMAIN

WARNING: no policy specified for admin/admin@LOCAL.DOMAIN; defaulting to no policy

Enter password for principal "admin/admin@LOCAL.DOMAIN":

Re-enter password for principal "admin/admin@LOCAL.DOMAIN":

Principal "admin/admin@LOCAL.DOMAIN" created.

kadmin.local:

kadmin.local: exit
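The interactive session above can also be scripted, since kadmin.local accepts a single query through -q and addprinc accepts a password through -pw. The following is a minimal sketch; add_principal and the DRY_RUN variable are hypothetical names, and by default the function only prints a masked command instead of running it.

```shell
#!/usr/bin/env bash
# Sketch: create a principal without the interactive prompt.
# add_principal and DRY_RUN are hypothetical names for this example.
add_principal() {
  local principal="$1" password="$2"
  local query="addprinc -pw ${password} ${principal}"
  if [ "${DRY_RUN:-1}" = "1" ]; then
    # Dry run (default): show the command with the password masked.
    printf 'kadmin.local -q "%s"\n' "addprinc -pw *** ${principal}"
  else
    # Real run: must be executed as root on the KDC host.
    kadmin.local -q "$query"
  fi
}

# Example (dry run): add_principal admin/admin@LOCAL.DOMAIN 'choose-a-password'
```

Note that a password passed with -pw is visible in the process list and shell history, so the interactive prompt shown above remains the safer choice on shared hosts.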

Starting the Services and Testing

Enable the krb5kdc and kadmin services at boot and start them:

[root@fetch-master ~]# chkconfig krb5kdc on

[root@fetch-master ~]# chkconfig kadmin on

[root@fetch-master ~]# service krb5kdc start

Starting Kerberos 5 KDC: [OK]

[root@fetch-master ~]# service kadmin start

Starting Kerberos 5 Admin Server: [OK]

Test the service:

[root@fetch-master ~]# kinit admin/admin@LOCAL.DOMAIN

Password for admin/admin@LOCAL.DOMAIN:

[root@fetch-master ~]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: admin/admin@LOCAL.DOMAIN

Valid starting Expires Service principal

05/28/18 15:20:04 05/29/18 15:20:04 krbtgt/LOCAL.DOMAIN@LOCAL.DOMAIN

renew until 06/04/18 15:20:04
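The kinit and klist pair above can be combined so that a ticket is requested only when none is valid. The sketch relies on klist -s, which prints nothing and reports through its exit status whether the credential cache holds a valid ticket. The helper name ensure_ticket is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: request a ticket only if the credential cache has no valid one.
# ensure_ticket is a hypothetical helper name.
ensure_ticket() {
  local principal="$1"
  if klist -s; then
    # Exit status 0 from `klist -s` means a valid ticket is cached.
    echo "valid ticket already present"
  else
    # Otherwise prompt for the password of the given principal.
    kinit "$principal"
  fi
}

# Example: ensure_ticket admin/admin@LOCAL.DOMAIN
```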

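Installing the Kerberos Client

Install the Kerberos client on every node in the cluster:

Command: yum -y install krb5-workstation krb5-libs krb5-auth-dialog

(In my test environment the KDC and CM run on the same node, so these packages were already installed there in the step above. If CM runs on a different node, remember to install them on the CM node as well.)

[root@fetch-slave1 ~]# yum -y install krb5-workstation krb5-libs krb5-auth-dialog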

Installed:

krb5-workstation.x86_64 0:1.10.3-65.el6

Dependency Installed:

libkadm5.x86_64 0:1.10.3-65.el6

Updated:

krb5-libs.x86_64 0:1.10.3-65.el6

Dependency Updated:

krb5-devel.x86_64 0:1.10.3-65.el6

Complete!

Install an additional component on the CM node:

Command: yum -y install openldap-clients

[root@fetch-master ~]# yum -y install openldap-clients

Running Transaction Test

Transaction Test Succeeded

Running Transaction

Updating : openldap-2.4.40-16.el6.x86_64 1/3

Installing : openldap-clients-2.4.40-16.el6.x86_64 2/3

Cleanup : openldap-2.4.23-31.el6.x86_64 3/3

Verifying : openldap-clients-2.4.40-16.el6.x86_64 1/3

Verifying : openldap-2.4.40-16.el6.x86_64 2/3

Verifying : openldap-2.4.23-31.el6.x86_64 3/3

Installed:

openldap-clients.x86_64 0:2.4.40-16.el6

Dependency Updated:

openldap.x86_64 0:2.4.40-16.el6

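Complete!

Copy the configuration file: copy krb5.conf from the KDC server to every Kerberos client (all cluster nodes).

# Remember to set admin_server and default_domain correctly.

# Above I used localhost everywhere, so it has to be changed to fetch-master.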

scp -P 2222 /etc/krb5.conf fetch-slave1:/etc/

scp -P 2222 /etc/krb5.conf fetch-slave2:/etc/

scp -P 2222 /etc/krb5.conf fetch-slave3:/etc/

scp -P 2222 /etc/krb5.conf fetch-slave4:/etc/

......
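The comments above point out that localhost has to be replaced with the KDC hostname before the clients can use the file. The following is a minimal sketch of that step plus the copy loop. KDC_HOST, SSH_PORT, and both function names are assumptions for illustration; the port matches the scp commands above. Nothing is copied: the second function only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: prepare a client copy of krb5.conf and print the scp commands.
# KDC_HOST, SSH_PORT and the function names are assumptions for this example.
KDC_HOST="fetch-master"
SSH_PORT=2222

# Print the file with "localhost" replaced in the kdc / admin_server lines only.
# The [logging] entries (kdc = FILE:...) do not match and are left untouched.
render_client_conf() {
  sed -E 's/^([[:space:]]*(kdc|admin_server)[[:space:]]*=[[:space:]]*)localhost[[:space:]]*$/\1'"${KDC_HOST}"'/' "$1"
}

# Print one scp command per client host (dry run, nothing is transferred).
print_scp_commands() {
  local conf="$1"; shift
  local host
  for host in "$@"; do
    echo "scp -P ${SSH_PORT} ${conf} ${host}:/etc/"
  done
}

# Example:
#   render_client_conf /etc/krb5.conf > /tmp/krb5.conf.clients
#   print_scp_commands /tmp/krb5.conf.clients fetch-slave1 fetch-slave2
```

Printing the commands first makes it easy to check the host list before running them.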

CDH集群启用Kerberos

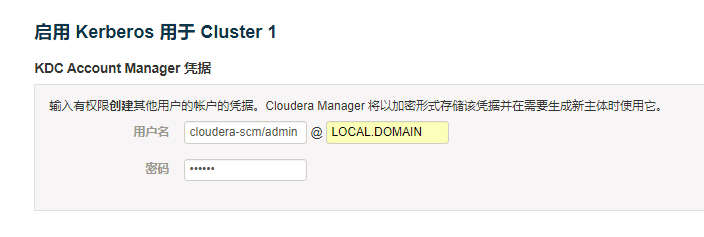

在KDC中给Cloudera Manager添加管理员账号,并设置密码。

1

2

3

4

5

6

7

8

9

10root@fetch-master ~]# kadmin.local

Authenticating as principal admin/admin@LOCAL.DOMAIN with password.

kadmin.local: add

add_policy add_principal addpol addprinc

kadmin.local: addprinc cloudera-scm/admin@LOCAL.DOMAIN

WARNING: no policy specified for cloudera-scm/admin@LOCAL.DOMAIN; defaulting to no policy

Enter password for principal "cloudera-scm/admin@LOCAL.DOMAIN":

Re-enter password for principal "cloudera-scm/admin@LOCAL.DOMAIN":

Principal "cloudera-scm/admin@LOCAL.DOMAIN" created.

kadmin.local: exitCDH启用Kerberos

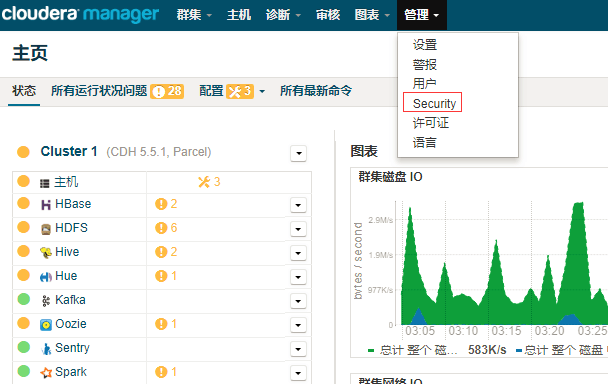

2.1. 进入Cloudera Manager的“管理”-> “Security”界面->启用Kerberos

2.2. 检查信息,勾选

2.3. 配置KDC信息

2.4. 不建议让Cloudera Manager来管理krb5.conf,点击“继续”

2.5. 输入CM的Kerbers管理员账号

2.6. Kerberos主体

2.7. 重启集群

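Testing and Operations

First, a look at a few commands:

klist: show the currently authenticated principal

kinit: authenticate

kadmin.local: log in directly on the KDC host

addprinc: add a principal (inside kadmin.local)

delprinc: delete a principal (inside kadmin.local)

modprinc: modify a principal (inside kadmin.local)

listprincs: list principals (inside kadmin.local)

kinit -R: renew the ticket

kdestroy: destroy the current credential cache

Example 1: Logging in to the administrator account. On the KDC host itself you can log in directly with kadmin.local. On other machines, authenticate with kinit first.

# Log in to the admin account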

[root@fetch-master ~]# kadmin.local

Authenticating as principal root/admin@LOCAL.DOMAIN with password.

kadmin.local: exit

# Show the currently authenticated principal

[root@fetch-master ~]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: root@LOCAL.DOMAIN

Valid starting Expires Service principal

05/28/18 17:43:12 05/29/18 17:43:12 krbtgt/LOCAL.DOMAIN@LOCAL.DOMAIN

renew until 06/04/18 17:43:1

# Other nodes: authenticate with kinit

[root@fetch-slave1 hbase]# kinit admin/admin

Password for admin/admin@LOCAL.DOMAIN:

# Other nodes: show the currently authenticated principal

[root@fetch-slave1 hbase]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: admin/admin@LOCAL.DOMAIN

Valid starting Expires Service principal

05/28/18 18:07:26 05/29/18 18:07:26 krbtgt/LOCAL.DOMAIN@LOCAL.DOMAIN

renew until 06/04/18 18:07:26

# Without authentication, the following exception occurs:

FATAL ipc.AbstractRpcClient: SASL authentication failed. The most likely cause is missing or invalid credentials. Consider 'kinit'.

javax.security.sasl.SaslException:

GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]

Example 2: After the ticket is destroyed, HDFS can no longer be listed; after re-authenticating, it can be listed again.

[root@fetch-slave2 ~]# kdestroy ## destroy the ticket

You have mail in /var/spool/mail/root

[root@fetch-slave2 ~]# klist

klist: No credentials cache found (ticket cache FILE:/tmp/krb5cc_0)

[root@fetch-slave2 ~]# hadoop fs -ls / ## after the ticket is destroyed, listing fails

18/05/29 17:22:26 WARN security.UserGroupInformation: PriviledgedActionException as:root (auth:KERBEROS) cause:javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]

18/05/29 17:22:26 WARN ipc.Client: Exception encountered while connecting to the server : javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]

18/05/29 17:22:26 WARN security.UserGroupInformation: PriviledgedActionException as:root (auth:KERBEROS) cause:java.io.IOException: javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]

ls: Failed on local exception: java.io.IOException: javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)]; Host Details : local host is: "fetch-slave2/10.141.4.203"; destination host is: "fetch-master":8020;

[root@fetch-slave2 ~]# kinit admin/admin ## after re-authenticating, operations work again

Password for admin/admin@LOCAL.DOMAIN:

[root@fetch-slave2 ~]# hadoop fs -ls /

Found 5 items

drwxrwxrwx - hdfs supergroup 0 2017-06-22 16:39 /data

drwx------ - hbase hbase 0 2018-05-28 17:08 /hbase

drwxr-xr-x - hdfs supergroup 0 2017-01-16 18:03 /system

drwxrwxrwt - hdfs supergroup 0 2018-01-09 11:06 /tmp

drwxrwxrwx - hdfs supergroup 0 2018-05-28 18:38 /user

Example 3: Running Hive so that it launches a job on YARN

4.1. Using the admin credentials directly produces the following exception, because there is no admin user on the operating system:

Application application_1527494654301_0004 failed 2 times due to AM Container for appattempt_1527494654301_0004_000002 exited with exitCode: -1000

For more detailed output, check application tracking page:http://fetch-master:8088/proxy/application_1527494654301_0004/Then, click on links to logs of each attempt.

Diagnostics: Application application_1527494654301_0004 initialization failed (exitCode=255) with output: main : command provided 0

main : run as user is admin

main : requested yarn user is admin

User admin not found

Failing this attempt. Failing the application.

4.2. Running the job then fails with the following exception, because the user id is below 1000:

Application application_1527494654301_0003 failed 2 times due to AM Container for appattempt_1527494654301_0003_000002 exited with exitCode: -1000

For more detailed output, check application tracking page:http://fetch-master:8088/proxy/application_1527494654301_0003/Then, click on links to logs of each attempt.

Diagnostics: Application application_1527494654301_0003 initialization failed (exitCode=255) with output: main : command provided 0

main : run as user is yarn

main : requested yarn user is yarn

Requested user yarn is not whitelisted and has id 488,which is below the minimum allowed 1000

Failing this attempt. Failing the application.

Solutions:

4.2.1. Change the user's id: usermod -u <new-uid> <user>

4.2.2. Change the corresponding setting in Cloudera Manager: YARN -> NodeManager -> Security -> set min.user.id to 0.

4.3. Running as the yarn user then fails because the user is banned. On the YARN service page in CM, adjust the banned.users list of prohibited system users:

Application application_1527590116657_0001 failed 2 times due to AM Container for appattempt_1527590116657_0001_000002 exited with exitCode: -1000

For more detailed output, check application tracking page:http://fetch-master:8088/proxy/application_1527590116657_0001/Then, click on links to logs of each attempt.

Diagnostics: Application application_1527590116657_0001 initialization failed (exitCode=255) with output: main : command provided 0

main : run as user is yarn

main : requested yarn user is yarn

Requested user yarn is banned

Failing this attempt. Failing the application.

4.4. Run the query as a user permitted by allowed.system.users, for example hive. Add the principal first, then run the query. It succeeds!

## Add the principal

[root@fetch-slave3 ~]# kadmin

Authenticating as principal admin/admin@LOCAL.DOMAIN with password.

Password for admin/admin@LOCAL.DOMAIN:

kadmin: addprinc hive/admin@LOCAL.DOMAIN

WARNING: no policy specified for hive/admin@LOCAL.DOMAIN; defaulting to no policy

Enter password for principal "hive/admin@LOCAL.DOMAIN":

Re-enter password for principal "hive/admin@LOCAL.DOMAIN":

Principal "hive/admin@LOCAL.DOMAIN" created.

kadmin: exit

## Query again; this time it runs successfully

[root@fetch-slave3 ~]# kinit hive/admin

[root@fetch-slave3 ~]# hive

## Run SQL that triggers a MapReduce job

hive> select count(1) from test11;

Query ID = root_20180529185252_84fdbaf2-6b3a-4470-b823-dda693865b54

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1527590116657_0002, Tracking URL = http://fetch-master:8088/proxy/application_1527590116657_0002/

Kill Command = /opt/cloudera/parcels/CDH/lib/hadoop/bin/hadoop job -kill job_1527590116657_0002

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2018-05-29 18:52:48,536 Stage-1 map = 0%, reduce = 0%

2018-05-29 18:52:58,874 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.33 sec

2018-05-29 18:53:05,081 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 2.74 sec

MapReduce Total cumulative CPU time: 2 seconds 740 msec

Ended Job = job_1527590116657_0002

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 2.74 sec HDFS Read: 6800 HDFS Write: 3 SUCCESS

Total MapReduce CPU Time Spent: 2 seconds 740 msec

OK

18

Time taken: 71.793 seconds, Fetched: 1 row(s)
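The failures in 4.1 to 4.3 and the success in 4.4 can be read as one sequence of checks on the submitting user. The sketch below is a simplified model of that sequence, not the NodeManager's actual code. The function names, the argument order, and the sample list values in the usage comment are all hypothetical; the messages are copied from the logs above.

```shell
#!/usr/bin/env bash
# Simplified model of the user checks behind the YARN errors shown above.
# in_list and check_yarn_user are hypothetical helpers for illustration only.

# Return 0 if $1 appears in the comma-separated list $2.
in_list() {
  case ",$2," in
    *",$1,"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Args: user, uid ("" if no OS account exists), min.user.id,
#       allowed.system.users, banned.users (both comma-separated).
check_yarn_user() {
  local user="$1" uid="$2" min_uid="$3" allowed="$4" banned="$5"
  if [ -z "$uid" ]; then
    echo "User $user not found"
    return 1
  fi
  if [ "$uid" -lt "$min_uid" ] && ! in_list "$user" "$allowed"; then
    echo "Requested user $user is not whitelisted and has id $uid,which is below the minimum allowed $min_uid"
    return 1
  fi
  if in_list "$user" "$banned"; then
    echo "Requested user $user is banned"
    return 1
  fi
  echo "ok"
}

# Example with made-up list values:
#   check_yarn_user yarn 488 1000 "hive" "yarn"
```

Read this way, lowering min.user.id alone is not enough for the yarn user, which is why 4.3 follows 4.2.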

4.5. Running a job as an ordinary user, using xuandongtang as the example.

4.5.1. Create the principal in Kerberos, then authenticate with kinit

4.5.2. The job can then be run (note: the user must exist on every node of the cluster, otherwise the error User xuandongtang not found is reported)

[root@fetch-slave3 ~]# kadmin

Authenticating as principal lidongxiao/admin@LOCAL.DOMAIN with password.

Password for lidongxiao/admin@LOCAL.DOMAIN:

kadmin: addprinc xuandongtang/admin@LOCAL.DOMAIN

WARNING: no policy specified for xuandongtang/admin@LOCAL.DOMAIN; defaulting to no policy

Enter password for principal "xuandongtang/admin@LOCAL.DOMAIN":

Re-enter password for principal "xuandongtang/admin@LOCAL.DOMAIN":

Principal "xuandongtang/admin@LOCAL.DOMAIN" created.

kadmin: exit

[root@fetch-slave3 ~]# kinit xuandongtang/admin@LOCAL.DOMAIN

Password for xuandongtang/admin@LOCAL.DOMAIN:

## Runs successfully!

[root@fetch-slave3 ~]# hive

hive>

> select count(1) from test11;

Query ID = root_20180530161717_62a841bc-6a27-45b7-9901-f97c7c8c3916

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1527590116657_0006, Tracking URL = http://fetch-master:8088/proxy/application_1527590116657_0006/

Kill Command = /opt/cloudera/parcels/CDH/lib/hadoop/bin/hadoop job -kill job_1527590116657_0006

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2018-05-30 16:18:19,573 Stage-1 map = 0%, reduce = 0%

2018-05-30 16:18:27,805 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.3 sec

2018-05-30 16:18:37,097 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 2.79 sec

MapReduce Total cumulative CPU time: 2 seconds 790 msec

Ended Job = job_1527590116657_0006

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 2.79 sec HDFS Read: 6830 HDFS Write: 3 SUCCESS

Total MapReduce CPU Time Spent: 2 seconds 790 msec

OK

18

Time taken: 71.835 seconds, Fetched: 1 row(s)
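Since 4.5.2 requires the OS account to exist on every node, it can help to check that before submitting a job. The following is a minimal sketch that prints one ssh command per host. SSH_PORT and print_user_checks are assumptions for illustration, with the port taken from the scp commands earlier in this post. The function only prints the commands; it does not connect anywhere.

```shell
#!/usr/bin/env bash
# Sketch: print the ssh commands that check for an OS account on each node.
# SSH_PORT and print_user_checks are assumptions for this example.
SSH_PORT=2222

print_user_checks() {
  local user="$1"; shift
  local host
  for host in "$@"; do
    # `id <user>` exits non-zero on the remote host if the account is missing.
    echo "ssh -p ${SSH_PORT} ${host} id ${user}"
  done
}

# Example: print_user_checks xuandongtang fetch-slave1 fetch-slave2
```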